Chapter 2 Overview of surveys

2.1 Introduction

Developing surveys to gather accurate information about populations involves an intricate and time-intensive process. Researchers can spend months, or even years, developing the study design, questions, and other methods for a single survey to ensure high-quality data is collected.

Before analyzing survey data, we recommend understanding the entire survey life cycle. This understanding can provide better insight into what types of analyses should be conducted on the data. The survey life cycle consists of the necessary stages to execute a survey project successfully. Each stage influences the survey’s timing, costs, and feasibility, consequently impacting the data collected and how we should analyze them. Figure 2.1 shows a high-level overview of the survey process.

FIGURE 2.1: Overview of the survey process

The survey life cycle starts with a research topic or question of interest (e.g., the impact that childhood trauma has on health outcomes later in life). Drawing from available resources can result in a reduced burden on respondents, lower costs, and faster research outcomes. Therefore, we recommend reviewing existing data sources to determine if data that can address this question are already available. However, if existing data cannot answer the nuances of the research question, we can capture the exact data we need through a questionnaire, or a set of questions.

To gain a deeper understanding of survey design and implementation, we recommend reviewing several pieces of existing literature in detail (e.g., Biemer and Lyberg 2003; Bradburn, Sudman, and Wansink 2004; Dillman, Smyth, and Christian 2014; Groves et al. 2009; Tourangeau, Rips, and Rasinski 2000; Valliant, Dever, and Kreuter 2013).

2.2 Searching for public-use survey data

Throughout this book, we use public-use datasets from different surveys, including the American National Election Studies (ANES), the Residential Energy Consumption Survey (RECS), the National Crime Victimization Survey (NCVS), and the AmericasBarometer surveys.

As mentioned above, we should look for existing data that can provide insights into our research questions before embarking on a new survey. One of the greatest sources of data is the government. For example, in the U.S., we can get data directly from the various statistical agencies such as the U.S. Energy Information Administration or Bureau of Justice Statistics. Other countries often have data available through official statistics offices, such as the Office for National Statistics in the United Kingdom.

In addition to government data, many researchers make their data publicly available through repositories such as the Inter-university Consortium for Political and Social Research (ICPSR) or the Odum Institute Data Archive. Searching these repositories or other compiled lists (e.g., Analyze Survey Data for Free) can be an efficient way to identify surveys with questions related to our research topic.

2.3 Pre-survey planning

There are multiple things to consider when starting a survey. Errors are the differences between the true values of the variables being studied and the values obtained through the survey. Each step and decision made before the launch of the survey impact the types of errors that are introduced into the data, which in turn impact how to interpret the results.

Generally, survey researchers consider there to be seven main sources of error that fall under either Representation or Measurement (Groves et al. 2009):

- Representation

- Coverage Error: A mismatch between the population of interest and the sampling frame, the list from which the sample is drawn.

- Sampling Error: Error produced when selecting a sample, the subset of the population, from the sampling frame. This error is due to randomization, and we discuss how to quantify this error in Chapter 10. There is no sampling error in a census, as there is no randomization. The sampling error measures the difference between all potential samples under the same sampling method.

- Nonresponse Error: Differences between those who responded and did not respond to the survey (unit nonresponse) or a given question (item nonresponse).

- Adjustment Error: Error introduced during post-survey statistical adjustments.

- Measurement

- Validity: A mismatch between the research topic and the question(s) used to collect that information.

- Measurement Error: A mismatch between what the researcher asked and how the respondent answered.

- Processing Error: Edits by the researcher to responses provided by the respondent (e.g., adjustments to data based on illogical responses).

Almost every survey has errors. Researchers attempt to conduct a survey that reduces the total survey error, or the accumulation of all errors that may arise throughout the survey life cycle. By assessing these different types of errors together, researchers can seek strategies to maximize the overall survey quality and improve the reliability and validity of results (Biemer 2010). However, attempts to reduce individual source errors (and therefore total survey error) come at the price of time and money. For example:

- Coverage Error Tradeoff: Researchers can search for or create more accurate and updated sampling frames, but they can be difficult to construct or obtain.

- Sampling Error Tradeoff: Researchers can increase the sample size to reduce sampling error; however, larger samples can be expensive and time-consuming to field.

- Nonresponse Error Tradeoff: Researchers can increase or diversify efforts to improve survey participation, but this may be resource-intensive while not entirely removing nonresponse bias.

- Adjustment Error Tradeoff: Weighting is a statistical technique used to adjust the contribution of individual survey responses to the final survey estimates. It is typically done to make the sample more representative of the population of interest. However, if researchers do not carefully execute the adjustments or base them on inaccurate information, they can introduce new biases, leading to less accurate estimates.

- Validity Error Tradeoff: Researchers can increase validity through a variety of ways, such as using established scales or collaborating with a psychometrician during survey design to pilot and evaluate questions. However, doing so increases the amount of time and resources needed to complete survey design.

- Measurement Error Tradeoff: Researchers can use techniques such as questionnaire testing and cognitive interviewing to ensure respondents are answering questions as expected. However, these activities require time and resources to complete.

- Processing Error Tradeoff: Researchers can impose rigorous data cleaning and validation processes. However, this requires supervision, training, and time.

The challenge for survey researchers is to find the optimal tradeoffs among these errors. They must carefully consider ways to reduce each error source and total survey error while balancing their study’s objectives and resources.

For survey analysts, understanding the decisions that researchers took to minimize these error sources can impact how results are interpreted. The remainder of this chapter explores critical considerations for survey development. We explore how to consider each of these sources of error and how these error sources can inform the interpretations of the data.

2.4 Study design

From formulating methodologies to choosing an appropriate sampling frame, the study design phase is where the blueprint for a successful survey takes shape. Study design encompasses multiple parts of the survey life cycle, including decisions on the population of interest, survey mode (the format through which a survey is administered to respondents), timeline, and questionnaire design. Knowing who and how to survey individuals depends on the study’s goals and the feasibility of implementation. This section explores the strategic planning that lays the foundation for a survey.

2.4.1 Sampling design

The set or group we want to survey is known as the population of interest or the target population. The population of interest could be broad, such as “all adults age 18+ living in the U.S.” or a specific population based on a particular characteristic or location. For example, we may want to know about “adults aged 18–24 who live in North Carolina” or “eligible voters living in Illinois.”

However, a sampling frame with contact information is needed to survey individuals in these populations of interest. If we are looking at eligible voters, the sampling frame could be the voting registry for a given state or area. If we are looking at more broad populations of interest, like all adults in the United States, the sampling frame is likely imperfect. In these cases, a full list of individuals in the United States is not available for a sampling frame. Instead, we may choose to use a sampling frame of mailing addresses and send the survey to households, or we may choose to use random digit dialing (RDD) and call random phone numbers (that may or may not be assigned, connected, and working).

These imperfect sampling frames can result in coverage error where there is a mismatch between the population of interest and the list of individuals we can select. For example, if we are looking to obtain estimates for “all adults aged 18+ living in the U.S.,” a sampling frame of mailing addresses will miss specific types of individuals, such as the homeless, transient populations, and incarcerated individuals. Additionally, many households have more than one adult resident, so we would need to consider how to get a specific individual to fill out the survey (called within household selection) or adjust the population of interest to report on “U.S. households” instead of “individuals.”

Once we have selected the sampling frame, the next step is determining how to select individuals for the survey. In rare cases, we may conduct a census and survey everyone on the sampling frame. However, the ability to implement a questionnaire at that scale is something only a few can do (e.g., government censuses). Instead, we typically choose to sample individuals and use weights to estimate numbers in the population of interest. They can use a variety of different sampling methods, and more information on these can be found in Chapter 10. This decision of which sampling method to use impacts sampling error and can be accounted for in weighting.

Example: Number of pets in a household

Let’s use a simple example where we are interested in the average number of pets in a household. We need to consider the population of interest for this study. Specifically, are we interested in all households in a given country or households in a more local area (e.g., city or state)? Let’s assume we are interested in the number of pets in a U.S. household with at least one adult (18 years or older). In this case, a sampling frame of mailing addresses would introduce only a small amount of coverage error as the frame would closely match our population of interest. Specifically, we would likely want to use the Computerized Delivery Sequence File (CDSF), which is a file of mailing addresses that the United States Postal Service (USPS) creates and covers nearly 100% of U.S. households (Harter et al. 2016). To sample these households, for simplicity, we use a stratified simple random sample design (see Chapter 10 for more information on sample designs), where we randomly sample households within each state (i.e., we stratify by state).

Throughout this chapter, we build on this example research question to plan a survey.

2.4.2 Data collection planning

With the sampling design decided, researchers can then decide how to survey these individuals. Specifically, the modes used for contacting and surveying the sample, how frequently to send reminders and follow-ups, and the overall timeline of the study are some of the major data collection determinations. Traditionally, survey researchers have considered there to be four main modes1:

- Computer-Assisted Personal Interview (CAPI; also known as face-to-face or in-person interviewing)

- Computer-Assisted Telephone Interview (CATI; also known as phone or telephone interviewing)

- Computer-Assisted Web Interview (CAWI; also known as web or online interviewing)

- Paper and Pencil Interview (PAPI)

We can use a single mode to collect data or multiple modes (also called mixed-modes). Using mixed-modes can allow for broader reach and increase response rates depending on the population of interest (Biemer et al. 2017; DeLeeuw 2005, 2018). For example, we could both call households to conduct a CATI survey and send mail with a PAPI survey to the household. By using both modes, we could gain participation through the mail from individuals who do not pick up the phone to unknown numbers or through the phone from individuals who do not open all of their mail. However, mode effects (where responses differ based on the mode of response) can be present in the data and may need to be considered during analysis.

When selecting which mode, or modes, to use, understanding the unique aspects of the chosen population of interest and sampling frame provides insight into how they can best be reached and engaged. For example, if we plan to survey adults aged 18–24 who live in North Carolina, asking them to complete a survey using CATI (i.e., over the phone) would likely not be as successful as other modes like the web. This age group does not talk on the phone as much as other generations and often does not answer phone calls from unknown numbers. Additionally, the mode for contacting respondents relies on what information is available in the sampling frame. For example, if our sampling frame includes an email address, we could email our selected sample members to convince them to complete a survey. Alternatively, if the sampling frame is a list of mailing addresses, we could contact sample members with a letter.

It is important to note that there can be a difference between the contact and survey modes. For example, if we have a sampling frame with addresses, we can send a letter to our sample members and provide information on completing a web survey. Another option is using mixed-mode surveys by mailing sample members a paper and pencil survey but also including instructions to complete the survey online. Combining different contact modes and different survey modes can be helpful in reducing unit nonresponse error–where the entire unit (e.g., a household) does not respond to the survey at all–as different sample members may respond better to different contact and survey modes. However, when considering which modes to use, it is important to make access to the survey as easy as possible for sample members to reduce burden and unit nonresponse.

Another way to reduce unit nonresponse error is by varying the language of the contact materials (Dillman, Smyth, and Christian 2014). People are motivated by different things, so constantly repeating the same message may not be helpful. Instead, mixing up the messaging and the type of contact material the sample member receives can increase response rates and reduce the unit nonresponse error. For example, instead of only sending standard letters, we could consider sending mailings that invoke “urgent” or “important” thoughts by sending priority letters or using other delivery services like FedEx, UPS, or DHL.

A study timeline may also determine the number and types of contacts. If the timeline is long, there is plentiful time for follow-ups and diversified messages in contact materials. If the timeline is short, then fewer follow-ups can be implemented. Many studies start with the tailored design method put forth by Dillman, Smyth, and Christian (2014) and implement five contacts:

- Pre-notification (Pre-notice) to let sample members know the survey is coming

- Invitation to complete the survey

- Reminder to also thank the respondents who have already completed the survey

- Reminder (with a replacement paper survey if needed)

- Final reminder

This method is easily adaptable based on the study timeline and needs but provides a starting point for most studies.

Example: Number of pets in a household

Let’s return to our example of the average number of pets in a household. We are using a sampling frame of mailing addresses, so we recommend starting our data collection with letters mailed to households, but later in data collection, we want to send interviewers to the house to conduct an in-person (or CAPI) interview to decrease unit nonresponse error. This means we have two contact modes (paper and in-person). As mentioned above, the survey mode does not have to be the same as the contact mode, so we recommend a mixed-mode study with both web and CAPI modes. Let’s assume we have 6 months for data collection, so we could recommend Table 2.1’s protocol:

| Week | Contact Mode | Contact Message | Survey Mode Offered |

|---|---|---|---|

| 1 | Mail: Letter | Pre-notice | — |

| 2 | Mail: Letter | Invitation | Web |

| 3 | Mail: Postcard | Thank You/Reminder | Web |

| 6 | Mail: Letter in large envelope | Animal Welfare Discussion | Web |

| 10 | Mail: Postcard | Inform Upcoming In-Person Visit | Web |

| 14 | In-Person Visit | — | CAPI |

| 16 | Mail: Letter | Reminder of In-Person Visit | Web, but includes a number to call to schedule CAPI |

| 20 | In-Person Visit | — | CAPI |

| 25 | Mail: Letter in large envelope | Survey Closing Notice | Web, but includes a number to call to schedule CAPI |

This is just one possible protocol that we can use that starts respondents with the web (typically done to reduce costs). However, we could begin in-person data collection earlier during the data collection period or ask interviewers to attempt more than two visits with a household.

2.4.3 Questionnaire design

When developing the questionnaire, it can be helpful to first outline the topics to be asked and include the “why” each question or topic is important to the research question(s). This can help us better tailor the questionnaire and reduce the number of questions (and thus the burden on the respondent) if topics are deemed irrelevant to the research question. When making these decisions, we should also consider questions needed for weighting. While we would love to have everyone in our population of interest answer our survey, this rarely happens. Thus, including questions about demographics in the survey can assist with weighting for nonresponse errors (both unit and item nonresponse). Knowing the details of the sampling plan and what may impact coverage error and sampling error can help us determine what types of demographics to include. Thus questionnaire design is typically done in conjunction with sampling design.

We can benefit from the work of others by using questions from other surveys. Demographic sections in surveys, such as race, ethnicity, or education, often are borrowed questions from a government census or other official surveys. Question banks such as the ICPSR variable search can provide additional potential questions.

If a question does not exist in a question bank, we can craft our own. When developing survey questions, we should start with the research topic and attempt to write questions that match the concept. The closer the question asked is to the overall concept, the better validity there is. For example, if we want to know how people consume T.V. series and movies but only ask a question about how many T.V.s are in the house, then we would be missing other ways that people watch T.V. series and movies, such as on other devices or at places outside of the home. As mentioned above, we can employ techniques to increase the validity of questionnaires. For example, questionnaire testing involves piloting the survey instrument to identify and fix potential issues before conducting the main survey. Additionally, we could conduct cognitive interviews – a technique where we walk through the survey with participants, encouraging them to speak their thoughts out loud to uncover how they interpret and understand survey questions.

Additionally, when designing questions, we should consider the mode for the survey and adjust the language appropriately. In self-administered surveys (e.g., web or mail), respondents can see all the questions and response options, but that is not the case in interviewer-administered surveys (e.g., CATI or CAPI). With interviewer-administered surveys, the response options must be read aloud to the respondents, so the question may need to be adjusted to create a better flow to the interview. Additionally, with self-administered surveys, because the respondents are viewing the questionnaire, the formatting of the questions is even more critical to ensure accurate measurement. Incorrect formatting or wording can result in measurement error, so following best practices or using existing validated questions can reduce error. There are multiple resources to help researchers draft questions for different modes (e.g., Bradburn, Sudman, and Wansink 2004; Dillman, Smyth, and Christian 2014; Fowler and Mangione 1989; Tourangeau, Couper, and Conrad 2004).

Example: Number of pets in a household

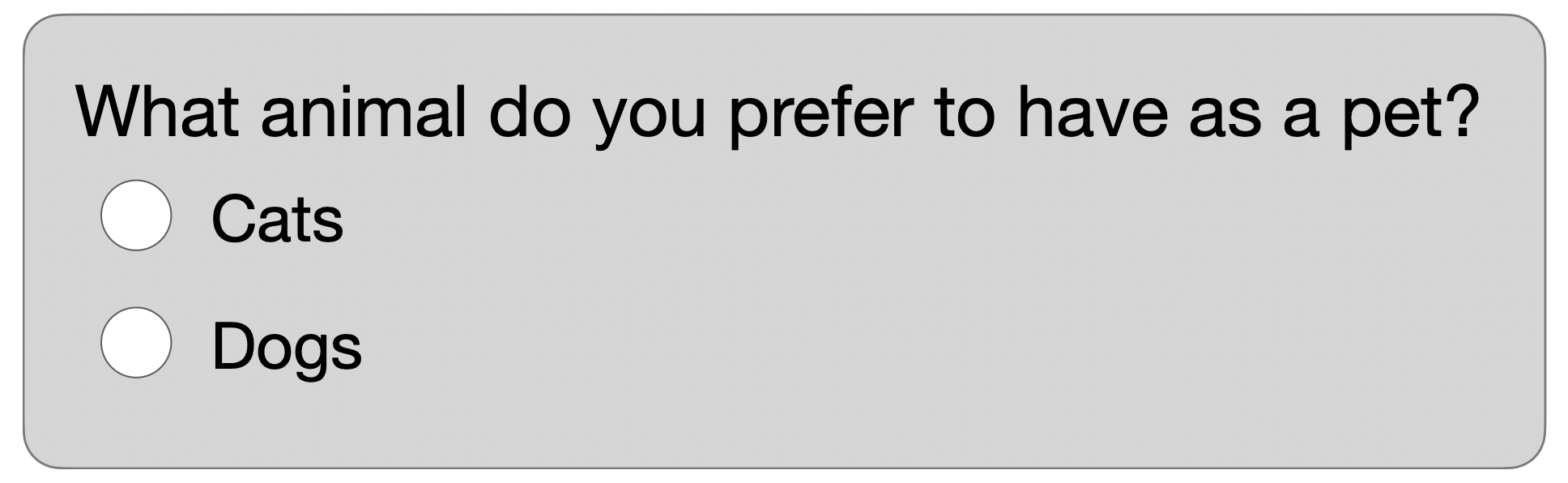

As part of our survey on the average number of pets in a household, we may want to know what animal most people prefer to have as a pet. Let’s say we have a question in our survey as displayed in Figure 2.2.

FIGURE 2.2: Example question asking pet preference type

This question may have validity issues as it only provides the options of “dogs” and “cats” to respondents, and the interpretation of the data could be incorrect. For example, if we had 100 respondents who answered the question and 50 selected dogs, then the results of this question cannot be “50% of the population prefers to have a dog as a pet,” as only two response options were provided. If a respondent taking our survey prefers turtles, they could either be forced to choose a response between these two (i.e., interpret the question as “between dogs and cats, which do you prefer?” and result in measurement error), or they may not answer the question (which results in item nonresponse error). Based on this, the interpretation of this question should be, “When given a choice between dogs and cats, 50% of respondents preferred to have a dog as a pet.”

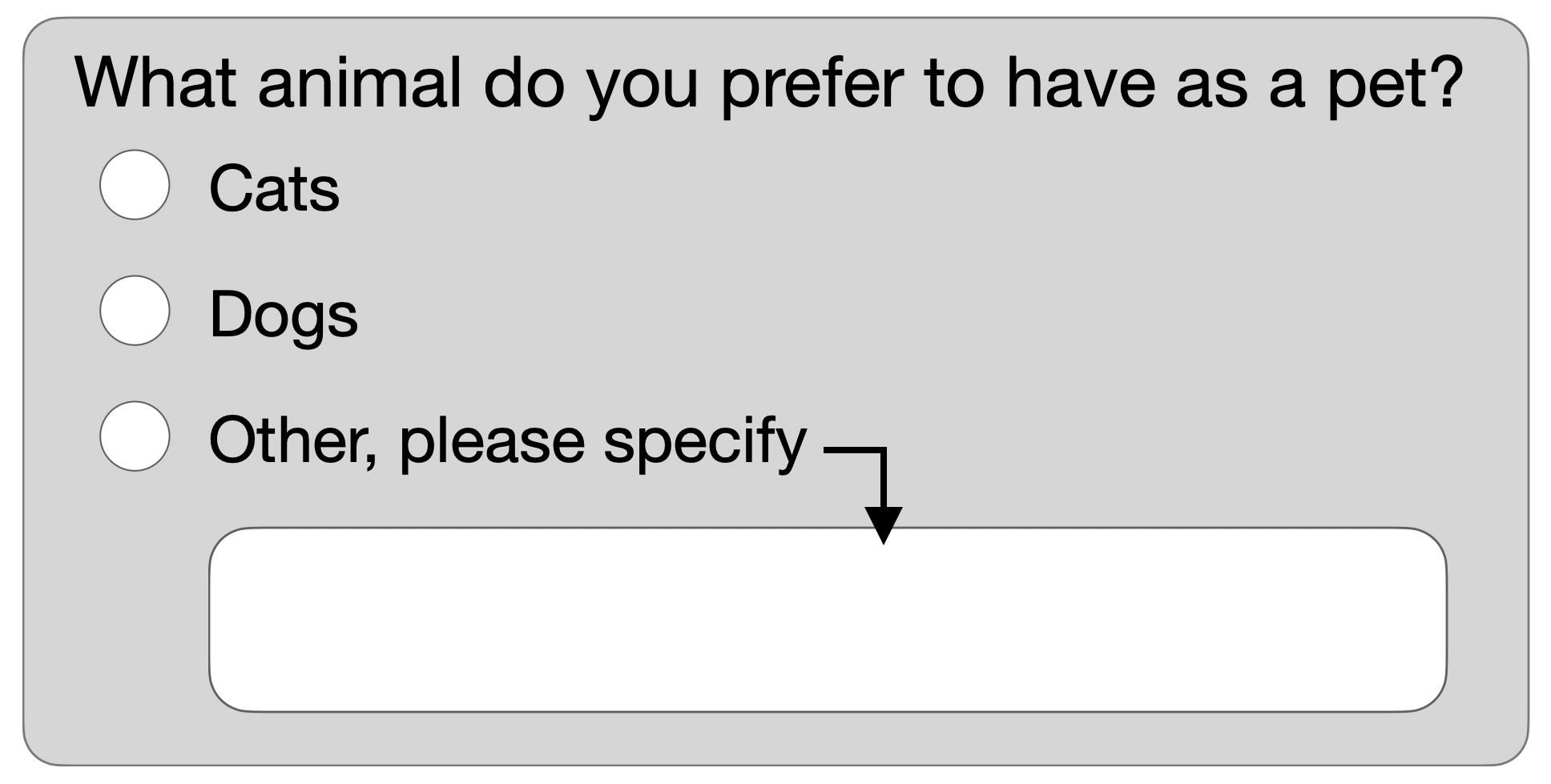

To avoid this issue, we should consider these possibilities and adjust the question accordingly. One simple way could be to add an “other” response option to give respondents a chance to provide a different response. The “other” response option could then include a way for respondents to write their other preference. For example, we could rewrite this question as displayed in Figure 2.3.

FIGURE 2.3: Example question asking pet preference type with other specify option

We can then code the responses from the open-ended box and get a better understanding of the respondent’s choice of preferred pet. Interpreting this question becomes easier as researchers no longer need to qualify the results with the choices provided.

This is a simple example of how the presentation of the question and options can impact the findings. For more complex topics and questions, we must thoroughly consider how to mitigate any impacts from the presentation, formatting, wording, and other aspects. For survey analysts, reviewing not only the data but also the wording of the questions is crucial to ensure the results are presented in a manner consistent with the question asked. Chapter 3 provides further details on how to review existing survey documentation to inform our analyses, and Chapter 8 goes into more details on communicating results.

2.5 Data collection

Once the data collection starts, we try to stick to the data collection protocol designed during pre-survey planning. However, effective researchers also prepare to adjust their plans and adapt as needed to the current progress of data collection (Schouten, Peytchev, and Wagner 2018). Some extreme examples could be natural disasters that could prevent mailings or interviewers from getting to the sample members. This could cause an in-person survey needing to quickly pivot to a self-administered survey, or the field period could be delayed, for example. Others could be smaller in that something newsworthy occurs connected to the survey, so we could choose to play this up in communication materials. In addition to these external factors, there could be factors unique to the survey, such as lower response rates for a specific subgroup, so the data collection protocol may need to find ways to improve response rates for that specific group.

2.6 Post-survey processing

After data collection, various activities need to be completed before we can analyze the survey. Multiple decisions made during this post-survey phase can assist us in reducing different error sources, such as weighting to account for the sample selection. Knowing the decisions made in creating the final analytic data can impact how we use the data and interpret the results.

2.6.1 Data cleaning and imputation

Post-survey cleaning is one of the first steps we do to get the survey responses into an analytic dataset. Data cleaning can consist of correcting inconsistent data (e.g., with skip pattern errors or multiple questions throughout the survey being consistent with each other), editing numeric entries or open-ended responses for grammar and consistency, or recoding open-ended questions into categories for analysis. There is no universal set of fixed rules that every survey must adhere to. Instead, each survey or research study should establish its own guidelines and procedures for handling various cleaning scenarios based on its specific objectives.

We should use our best judgment to ensure data integrity, and all decisions should be documented and available to those using the data in the analysis. Each decision we make impacts processing error, so often, multiple people review these rules or recode open-ended data and adjudicate any differences in an attempt to reduce this error.

Another crucial step in post-survey processing is imputation. Often, there is item nonresponse where respondents do not answer specific questions. If the questions are crucial to analysis efforts or the research question, we may implement imputation to reduce item nonresponse error. Imputation is a technique for replacing missing or incomplete data values with estimated values. However, as imputation is a way of assigning values to missing data based on an algorithm or model, it can also introduce processing error, so we should consider the overall implications of imputing data compared to having item nonresponse. There are multiple ways to impute data. We recommend reviewing other resources like Kim and Shao (2021) for more information.

Example: Number of pets in a household

Let’s return to the question we created to ask about animal preference. The “other specify” invites respondents to specify the type of animal they prefer to have as a pet. If respondents entered answers such as “puppy,” “turtle,” “rabit,” “rabbit,” “bunny,” “ant farm,” “snake,” “Mr. Purr,” then we may wish to categorize these write-in responses to help with analysis. In this example, “puppy” could be assumed to be a reference to a “Dog” and could be recoded there. The misspelling of “rabit” could be coded along with “rabbit” and “bunny” into a single category of “Bunny or Rabbit.” These are relatively standard decisions that we can make. The remaining write-in responses could be categorized in a few different ways. “Mr. Purr,” which may be someone’s reference to their own cat, could be recoded as “Cat,” or it could remain as “Other” or some category that is “Unknown.” Depending on the number of responses related to each of the others, they could all be combined into a single “Other” category, or maybe categories such as “Reptiles” or “Insects” could be created. Each of these decisions may impact the interpretation of the data, so we should document the types of responses that fall into each of the new categories and any decisions made.

2.6.2 Weighting

We can address some error sources identified in the previous sections using weighting. During the weighting process, weights are created for each respondent record. These weights allow the survey responses to generalize to the population. A weight, generally, reflects how many units in the population each respondent represents. Often, the weight is constructed such that the sum of the weights is the size of the population.

Weights can address coverage, sampling, and nonresponse errors. Many published surveys include an “analysis weight” variable that combines these adjustments. However, weighting itself can also introduce adjustment error, so we need to balance which types of errors should be corrected with weighting. The construction of weights is outside the scope of this book, we recommend referencing other materials if interested in weight construction (Valliant and Dever 2018). Instead, this book assumes the survey has been completed, weights are constructed, and data are available to users.

Example: Number of pets in a household

In the simple example of our survey, we decided to obtain a random sample from each state to select our sample members. Knowing this sampling design, we can include selection weights for analysis that account for how the sample members were selected for the survey. Additionally, the sampling frame may have the type of building associated with each address, so we could include the building type as a potential nonresponse weighting variable, along with some interviewer observations that may be related to our research topic of the average number of pets in a household. Combining these weights, we can create an analytic weight that analysts need to use when analyzing the data.

2.6.3 Disclosure

Before data is released publicly, we need to ensure that individual respondents cannot be identified by the data when confidentiality is required. There are a variety of different methods that can be used. Here we describe a few of the most commonly used:

- Data swapping: We may swap specific data values across different respondents so that it does not impact insights from the data but ensures that specific individuals cannot be identified.

- Top/bottom coding: We may choose top or bottom coding to mask extreme values. For example, we may top-code income values such that households with income greater than $500,000 are coded as “$500,000 or more” with other incomes being presented as integers between $0 and $499,999. This can impact analyses at the tails of the distribution.

- Coarsening: We may use coarsening to mask unique values. For example, a survey question may ask for a precise income but the public data may include income as a categorical variable. Another example commonly used in survey practice is to coarsen geographic variables. Data collectors likely know the precise address of sample members, but the public data may only include the state or even region of respondents.

- Perturbation: We may add random noise to outcomes. As with swapping, this is done so that it does not impact insights from the data but ensures that specific individuals cannot be identified.

There is as much art as there is science to the methods used for disclosure. Only high-level comments about the disclosure are provided in the survey documentation, not specific details. This ensures nobody can reverse the disclosure and thus identify individuals. For more information on different disclosure methods, please see Skinner (2009) and the AAPOR Standards.

2.6.4 Documentation

Documentation is a critical step of the survey life cycle. We should systematically record all the details, decisions, procedures, and methodologies to ensure transparency, reproducibility, and the overall quality of survey research.

Proper documentation allows analysts to understand, reproduce, and evaluate the study’s methods and findings. Chapter 3 dives into how analysts should use survey data documentation.

2.7 Post-survey data analysis and reporting

After completing the survey life cycle, the data are ready for analysts. Chapter 4 continues from this point. For more information on the survey life cycle, please explore the references cited throughout this chapter.

References

Other modes such as using mobile apps or text messaging can also be considered, but at the time of publication, they have smaller reach or are better for longitudinal studies (i.e., surveying the same individuals over many time periods of a single study).↩︎